[title]项目简介[/title]

实验目的

通过利用BeautifulSoup、Re、XPath分别爬取瓜子车二手网的信息,使学生能够熟练掌握这三种方式爬取信息,并对他们之间的性能有一个清晰的认知。

实验要求

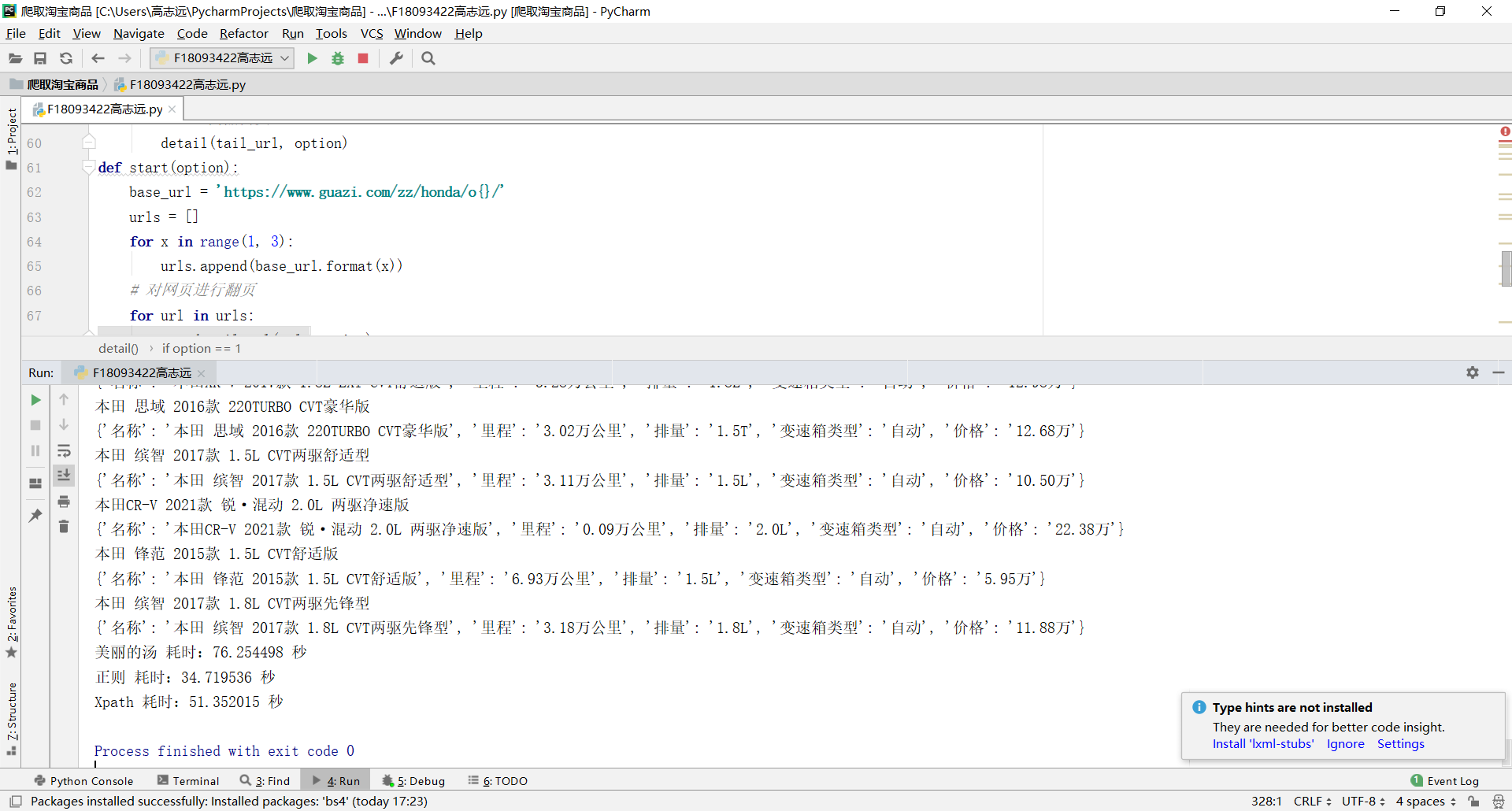

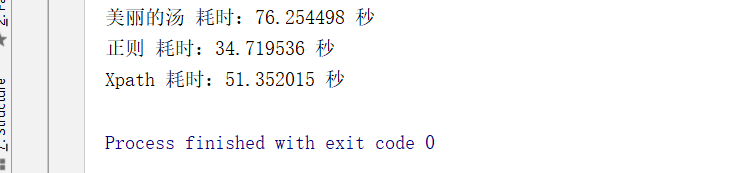

爬取瓜子车二手网的信息(https://www.guazi.com/zz/honda/),爬取该网址的前三页,爬取的具体信息包括车的名称,里程,排量,变速箱类型,价格。不需要显示具体爬取的内容,需要在控制台输出每种爬取方式所花费的时间,如下图所示:

[title]代码[/title]

import re

import time

from lxml import etree

import requests

from bs4 import BeautifulSoup

headers = {

'User-Agent':'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/83.0.4103.116 Safari/537.36',

'Cookie': 'uuid=9c698df2-3dd4-4c2b-b50e-54ba5f2df369; clueSourceCode=%2A%2300; ganji_uuid=5880555117435732631406; sessionid=ccba7bc1-01c8-496c-b142-05759c1d8a11; cainfo=%7B%22ca_a%22%3A%22-%22%2C%22ca_b%22%3A%22-%22%2C%22ca_s%22%3A%22self%22%2C%22ca_n%22%3A%22self%22%2C%22ca_medium%22%3A%22-%22%2C%22ca_term%22%3A%22-%22%2C%22ca_content%22%3A%22-%22%2C%22ca_campaign%22%3A%22-%22%2C%22ca_kw%22%3A%22-%22%2C%22ca_i%22%3A%22-%22%2C%22scode%22%3A%22-%22%2C%22keyword%22%3A%22-%22%2C%22ca_keywordid%22%3A%22-%22%2C%22display_finance_flag%22%3A%22-%22%2C%22platform%22%3A%221%22%2C%22version%22%3A1%2C%22client_ab%22%3A%22-%22%2C%22guid%22%3A%229c698df2-3dd4-4c2b-b50e-54ba5f2df369%22%2C%22ca_city%22%3A%22luoyang%22%2C%22sessionid%22%3A%22ccba7bc1-01c8-496c-b142-05759c1d8a11%22%7D; _gl_tracker=%7B%22ca_source%22%3A%22-%22%2C%22ca_name%22%3A%22-%22%2C%22ca_kw%22%3A%22-%22%2C%22ca_id%22%3A%22-%22%2C%22ca_s%22%3A%22self%22%2C%22ca_n%22%3A%22-%22%2C%22ca_i%22%3A%22-%22%2C%22sid%22%3A48585233536%7D; lg=1; antipas=G9H7067F669301065859320o10KT; cityDomain=zz; user_city_id=103; preTime=%7B%22last%22%3A1593580830%2C%22this%22%3A1592458154%2C%22pre%22%3A1592458154%7D'

}

# 在商品内页中操作

def detail(detail_url, option):

url = detail_url

res = requests.get(url, headers = headers)

if option == 1:

beautiful(res)

elif option == 2:

re_fun(res)

elif option == 3:

xpath(res)

def xpath(res):

html = res.text

selector = etree.HTML(html)

name = selector.xpath('/html/body/div[4]/div[3]/div[2]/h1/text()')[0].strip()

print(name)

mileage = selector.xpath('/html/body/div[4]/div[3]/div[2]/ul/li[2]/span/text()')[0]

cc = selector.xpath('/html/body/div[4]/div[3]/div[2]/ul/li[3]/span/text()')[0]

gearbox = selector.xpath('/html/body/div[4]/div[3]/div[2]/ul/li[4]/span/text()')[0]

price = selector.xpath('/html/body/div[4]/div[3]/div[2]/div[1]/div[2]/span/text()')[0]

res = {'名称':name, '里程':mileage, '排量':cc, '变速箱类型':gearbox, '价格':price}

print(res)

def re_fun(res):

name_temp = re.findall('<h2 class="titlediv" id="base"><span>(.*?)</span></h2>', res.text)[0]

name = name_temp.split(' ')[0]

mileage = re.findall('<span>(.*?)</span>', res.text)[0]

cc = re.findall('<span>(.*?)</span>', res.text)[1]

gearbox = re.findall('<span>(.*?)</span>', res.text)[2]

price = re.findall('<span class="price-num">(.*?)</span>', res.text)[0]

res = {'名称':name, '里程':mileage, '排量':cc, '变速箱类型':gearbox, '价格':price}

print(res)

def beautiful(res):

soup = BeautifulSoup(res.text, 'html.parser')

name_temp = soup.select('.titlebox')[0].get_text()

name = name_temp.split('\r\n')[1].strip()

mileage = soup.select('div.product-textbox > ul > li.two > span')[0].get_text()

cc = soup.select('div.product-textbox > ul > li.three > span')[0].get_text()

gearbox = soup.select('div.product-textbox > ul > li.last > span')[0].get_text()

price = soup.select('div.product-textbox > div.pricebox.js-disprice > div.price-main > span')[0].get_text()

res = {'名称': name, '里程': mileage, '排量': cc, '变速箱类型': gearbox, '价格': price}

print(res)

# 第1页 ... 第n页 分别处理

def get_datail_url(url, option):

res = requests.get(url, headers=headers)

# 使用正则解析内页url,获取第n页中的所有内页信息

res.encoding = res.apparent_encoding

tails = re.findall('href="(.*?)" target="_blank" class="car-a"', res.text)

# 拼接

for tail in tails:

tail_url = 'https://www.guazi.com' + tail

# 进入商品内页

detail(tail_url, option)

def start(option):

base_url = 'https://www.guazi.com/zz/honda/o{}/'

urls = []

for x in range(1, 3):

urls.append(base_url.format(x))

# 对网页进行翻页

for url in urls:

get_datail_url(url, option)

if __name__ == '__main__':

print("正在运行... ... 如果小于1秒,说明程序有误")

time_container = []

start_time = time.time()

start(1)

end_time = time.time()

time_container.append(end_time-start_time)

start_time = time.time()

start(2)

end_time = time.time()

time_container.append(end_time - start_time)

start_time = time.time()

start(3)

end_time = time.time()

time_container.append(end_time - start_time)

print("美丽的汤 耗时:%lf 秒" % time_container[0])

print("正则 耗时:%lf 秒" % time_container[1])

print("Xpath 耗时:%lf 秒"% time_container[2])

[title]爬虫效果[/title]